MIDI Music Files in Python

- Description: Music generation using ChatGPT prompts to produce Python code to create .midi files.

- Software and Languages: Terminal, Apache

- Full Transcript: Located Here.

Generating MIDI Files with ChatGPT and Python for Integration into Reason

This project explored the exciting intersection of artificial intelligence, Python programming, and music production. Leveraging ChatGPT, I generated Python code to create .midi files, which were then imported into Reason, a digital audio workstation (DAW) renowned for its versatility in music creation.

What is MIDI?

MIDI (Musical Instrument Digital Interface) has been a cornerstone of electronic music since its introduction in the early 1980s. It is a universal protocol that enables electronic musical instruments, computers, and other audio devices to communicate and synchronize. Unlike audio files, MIDI does not contain actual sound but instead provides instructions like pitch, velocity, and timing, which can be interpreted by instruments or synthesizers.
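To make the idea of "instructions, not sound" concrete, here is a minimal sketch of how a single note looks as raw MIDI bytes. The helper names `note_on` and `note_off` are illustrative and not part of any library; the status bytes (0x90 and 0x80) come from the MIDI 1.0 message format. Timing is not stored inside these messages: in a MIDI file, each event is preceded by a delta time saying how long to wait since the previous event.

```python
def note_on(note, velocity, channel=0):
    """Build a 3-byte note-on message: status byte, pitch, velocity."""
    if not (0 <= channel <= 15 and 0 <= note <= 127 and 0 <= velocity <= 127):
        raise ValueError("channel must be 0-15; note and velocity must be 0-127")
    return bytes([0x90 | channel, note, velocity])


def note_off(note, velocity=0, channel=0):
    """Build a 3-byte note-off message for the same pitch."""
    if not (0 <= channel <= 15 and 0 <= note <= 127 and 0 <= velocity <= 127):
        raise ValueError("channel must be 0-15; note and velocity must be 0-127")
    return bytes([0x80 | channel, note, velocity])


# Middle C (note 60) at a moderate velocity on the first channel
print(note_on(60, 64).hex(" "))  # 90 3c 40
print(note_off(60).hex(" "))     # 80 3c 00
```

A synthesizer receiving these six bytes decides what the note actually sounds like, which is why the same file can drive any instrument.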

The Role of ChatGPT

With the advent of large language models (LLMs) like ChatGPT, generating music sequences—such as drum patterns, chord progressions, or melodies—has become a reality. While ChatGPT does not produce .midi files directly, it excels at generating Python scripts that utilize libraries like Mido to craft MIDI data.
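As a rough illustration of the kind of script this produces, the sketch below turns a step pattern into MIDI events and writes them with Mido. It is not code from the ChatGPT transcript: the function names, the pattern notation, and the default values are all assumptions made for the example. Note 36 on channel index 9 is the bass drum in the General MIDI drum map.

```python
def pattern_to_events(pattern, note=36, step_ticks=120, gate_ticks=60):
    """Turn a step pattern such as "x...x..." into (type, note, delta_ticks) tuples.

    Each character is one sixteenth-note step (120 ticks at 480 ticks per beat);
    "x" triggers a hit, anything else is a rest.
    """
    events = []
    pending = 0  # ticks elapsed since the last event was emitted
    for step in pattern:
        if step == "x":
            events.append(("note_on", note, pending))
            events.append(("note_off", note, gate_ticks))
            pending = step_ticks - gate_ticks
        else:
            pending += step_ticks
    return events


def write_pattern(path, pattern, bars=4):
    """Write the repeated pattern to a .mid file (requires `pip install mido`)."""
    from mido import Message, MidiFile, MidiTrack  # imported lazily on purpose

    midi_file = MidiFile()  # Mido defaults to 480 ticks per beat
    track = MidiTrack()
    midi_file.tracks.append(track)
    for kind, note, delta in pattern_to_events(pattern * bars):
        track.append(Message(kind, note=note, velocity=100, time=delta, channel=9))
    midi_file.save(path)


print(pattern_to_events("x...x..."))
```

Calling `write_pattern("kick.mid", "x...x...x...x...")` (a hypothetical file name) would write four bars of a steady kick drum, assuming Mido is installed.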

The Process

- Generating Python Code:

  - I provided prompts to ChatGPT to design specific musical patterns, including repeating drum beats, melodic sequences, and randomized chord structures.

  - ChatGPT responded with Python code that utilized the Mido library, a tool specifically designed for creating and manipulating MIDI data.

- Setting Up the Environment:

  - Since the code required Mido, I ensured my Macintosh environment was properly configured:

    pip install mido
    pip install python-rtmidi

  - The python-rtmidi library supplies the backend Mido uses for real-time MIDI ports, such as live playback. Saving .midi files requires only Mido itself.

- Executing the Code:

  - Using Terminal, I ran the Python scripts generated by ChatGPT. These scripts output .midi files that were saved to my local machine.

  - Each file could then be previewed in a MIDI player or imported into Reason for further enhancement.

- Importing into Reason:

  - Once imported into Reason, the MIDI files could be assigned to virtual instruments or synthesizers, enabling creative manipulation of soundscapes and arrangements.

  - I explored layering these AI-generated patterns with other compositions, blending human creativity with machine-generated ideas.
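Before importing a generated file into a DAW, it can be worth confirming that it is a well-formed Standard MIDI File. The sketch below parses the 14-byte header chunk using only the standard library. `read_midi_header` is a hypothetical helper, and the sample bytes are synthetic rather than taken from one of the project's files.

```python
import struct


def read_midi_header(data):
    """Parse the MThd chunk that opens every Standard MIDI File."""
    if len(data) < 14 or data[:4] != b"MThd":
        raise ValueError("missing MThd header chunk")
    # Big-endian layout: 4-byte chunk length, then three 2-byte fields
    length, file_format, track_count, division = struct.unpack(">IHHH", data[4:14])
    if length < 6:
        raise ValueError("header chunk is too short")
    if division & 0x8000:
        raise ValueError("SMPTE time division is not handled in this sketch")
    return {"format": file_format, "tracks": track_count, "ticks_per_beat": division}


# Synthetic header: format 1, two tracks, 480 ticks per beat
sample = b"MThd" + struct.pack(">IHHH", 6, 1, 2, 480)
print(read_midi_header(sample))  # {'format': 1, 'tracks': 2, 'ticks_per_beat': 480}
```

For a real file, pass the first 14 bytes read in binary mode, for example `open(path, "rb").read(14)`.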

Insights and Challenges

Advantages:

- The process revealed how AI can assist in music production by quickly generating foundational elements, saving time and inspiring creativity.

- It highlighted the adaptability of MIDI as a format, proving its relevance even in AI-assisted workflows.

Challenges:

- The generated Python code required some refinement to align with specific musical goals.

- Variations in output quality meant that some sequences needed manual adjustment to fit the intended vibe of the project.

Why This Matters

This project demonstrated the potential for AI and programming to revolutionize creative workflows. By bridging the gap between raw data generation (Python scripts) and sophisticated music production (Reason), I was able to create a hybrid approach that combined the best of both worlds: computational precision and human artistry.

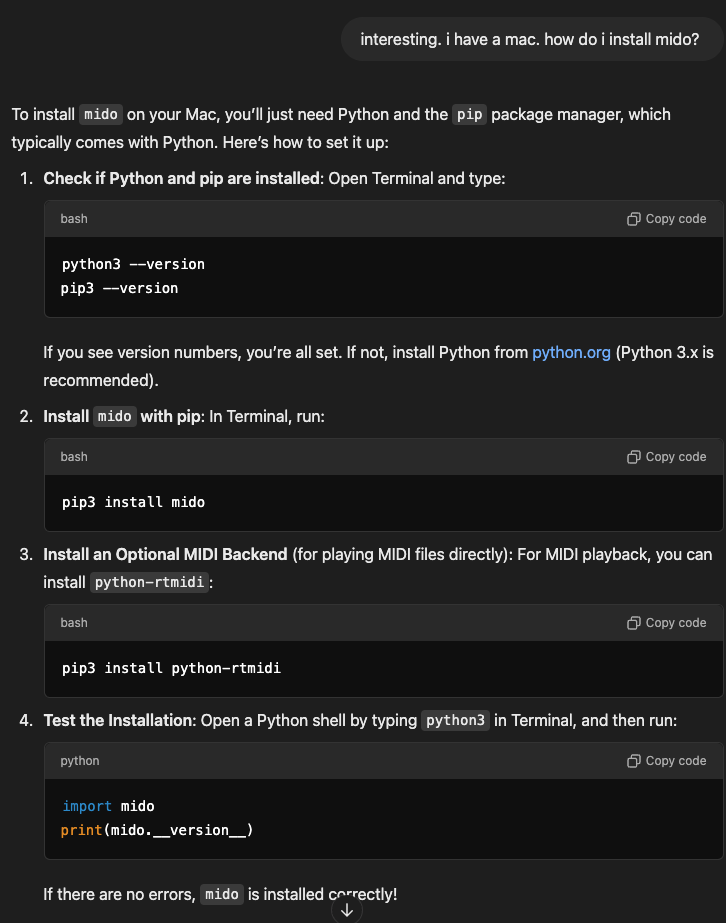

Instructions for Installing the Mido Library

- Open Terminal on your Macintosh.

- Run the following commands to install the required libraries:

  pip install mido
  pip install python-rtmidi

- Verify installation by running a simple Mido script to confirm everything is working correctly.
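One lightweight way to approach the verification step, sketched here with only the standard library, is to check that both packages can be found before running any generated script. The function name `missing_packages` is hypothetical. Note that python-rtmidi is imported under the module name `rtmidi`.

```python
import importlib.util


def missing_packages(names=("mido", "rtmidi")):
    """Return the import names from `names` that Python cannot locate."""
    return [name for name in names if importlib.util.find_spec(name) is None]


missing = missing_packages()
if missing:
    print("Missing:", ", ".join(missing))
else:
    print("mido and python-rtmidi are importable")
```

If anything is reported missing, rerunning the `pip install` commands in the same Python environment used to run the scripts is the usual fix.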

With these tools, the possibilities for AI-assisted music creation are endless. Whether it’s drum beats, complex melodies, or innovative chord progressions, combining ChatGPT, Python, and DAWs like Reason opens up exciting new frontiers for musicians and creators.

Here was that part of the conversation.

From there, I was able to produce a few melodies using parameters such as random number generators, specified chords and chord changes, note lengths, tempo, references to an artistic style, and so forth. Here was a successful bit of code:

Example Code (python)

Prompt

okay, let's give it a try. we're going back to what i originally requested. a 120bpm sequence lasting 64 bars in which no 4 notes ever repeat in sequence, based on the style of aphex twin, also with no two notes in sequence being the same length.

from mido import Message, MetaMessage, MidiFile, MidiTrack, bpm2tempo
import random

# Set up MIDI file and tracks
midi_file = MidiFile()
ambient_track = MidiTrack()
harmony_track = MidiTrack()
midi_file.tracks.append(ambient_track)
midi_file.tracks.append(harmony_track)

# Define constants
bpm = 60
seconds_per_beat = 60 / bpm  # Each beat is 1 second
total_duration_seconds = 120  # 2 minutes
ticks_per_beat = midi_file.ticks_per_beat  # 480 by default in Mido
ambient_current_time = 0

# Store the tempo in the file; without it, players assume 120 BPM
ambient_track.append(MetaMessage('set_tempo', tempo=bpm2tempo(bpm), time=0))

# Define note lengths in beats and their lengths in ticks
note_durations = [1, 2, 4]  # quarter, half, and whole notes
note_length_times = {
    1: ticks_per_beat,      # quarter note
    2: ticks_per_beat * 2,  # half note
    4: ticks_per_beat * 4,  # whole note
}

# Define A minor scale notes (A3 to G5)
a_minor_scale = [57, 59, 60, 62, 64, 65, 67]  # A3, B3, C4, D4, E4, F4, G4 (middle C = 60)
a_minor_notes = a_minor_scale + [n + 12 for n in a_minor_scale]  # Extend to two octaves (A3 to G5)

# Generate the main ambient track from randomly chosen notes in A minor
while ambient_current_time < total_duration_seconds:
    # Select a random note from the A minor scale and a random duration
    note = random.choice(a_minor_notes)
    duration_beats = random.choice(note_durations)
    duration_time = note_length_times[duration_beats]

    # Add note on and off events for the ambient melody
    ambient_track.append(Message('note_on', note=note, velocity=64, time=0))
    ambient_track.append(Message('note_off', note=note, velocity=64, time=duration_time))

    # Move time forward by the duration of this note
    ambient_current_time += duration_beats * seconds_per_beat

    # Harmonizing note: offset by a minor third, perfect fifth, or octave (in semitones)
    harmony_interval = random.choice([3, 7, 12])
    harmony_note = note + harmony_interval
    if harmony_note > 79:  # Keep within a reasonable range
        harmony_note = note - harmony_interval

    # Randomly delay the harmony note by up to half the main note's duration
    harmony_start_offset = random.randint(0, duration_time // 2)

    # Add note on and off events for the harmony track; the two delta times
    # sum to duration_time, so both tracks stay aligned
    harmony_track.append(Message('note_on', note=harmony_note, velocity=48, time=harmony_start_offset))
    harmony_track.append(Message('note_off', note=harmony_note, velocity=48, time=duration_time - harmony_start_offset))

# Save the MIDI file
midi_file.save('ambient_pad_with_harmony_in_am_revised.mid')
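The tick values in the script follow from simple arithmetic: a quarter note always lasts one beat's worth of ticks (480 here), and the tempo only decides how long that is in seconds. The sketch below spells this out. The helper names are illustrative, and 480 ticks per beat is assumed as the resolution.

```python
def beats_to_ticks(beats, ticks_per_beat=480):
    """Convert a length in beats to MIDI ticks."""
    return int(round(beats * ticks_per_beat))


def ticks_to_seconds(ticks, bpm, ticks_per_beat=480):
    """Convert ticks to seconds at a constant tempo."""
    return ticks / ticks_per_beat * 60.0 / bpm


whole_note = beats_to_ticks(4)
print(whole_note)                              # 1920
print(ticks_to_seconds(whole_note, bpm=60))    # 4.0
print(ticks_to_seconds(whole_note, bpm=120))   # 2.0
```

The same 1920-tick whole note lasts four seconds at 60 BPM and two seconds at 120 BPM, so the tick counts in a file do not change when the tempo does.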

Results

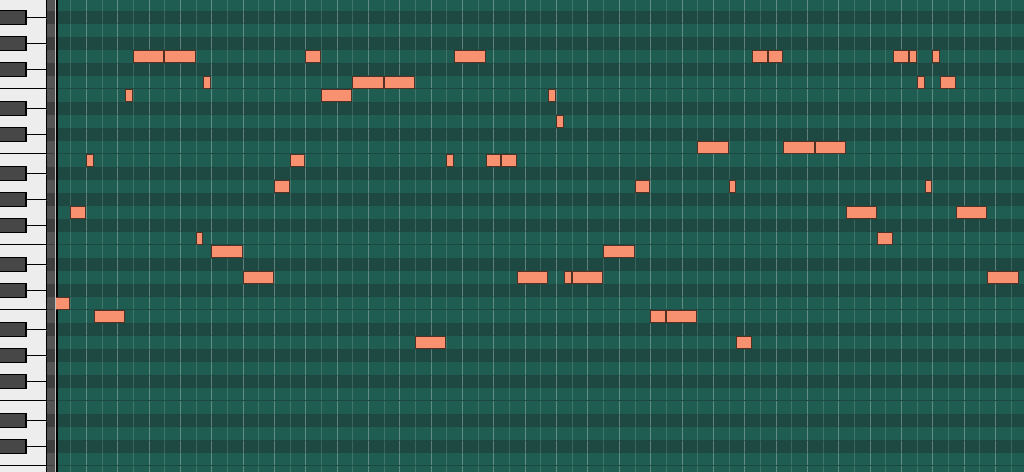

This produced a simple MIDI file. I also asked ChatGPT to come up with a harmony part.

This is a screenshot of the .MIDI file it generated.
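The constraints in the prompt quoted earlier (no four notes repeating in sequence, no two consecutive notes of the same length) can also be enforced explicitly rather than left to chance. The sketch below is one possible reading of those constraints, using rejection sampling. It is not code from the transcript, and `constrained_melody` and its parameters are hypothetical.

```python
import random


def constrained_melody(pitches, lengths, count, seed=None, max_tries=1000):
    """Pick `count` (pitch, length) pairs so that no run of four pitches
    occurs twice and no two consecutive notes share a length."""
    if len(set(lengths)) < 2:
        raise ValueError("need at least two distinct lengths")
    rng = random.Random(seed)
    notes = []
    seen_runs = set()  # every four-pitch run emitted so far
    for _ in range(count):
        for _attempt in range(max_tries):
            pitch = rng.choice(pitches)
            run = tuple(p for p, _length in notes[-3:]) + (pitch,)
            if len(run) < 4 or run not in seen_runs:
                break  # this pitch does not recreate an earlier run
        else:
            raise RuntimeError("no unused continuation found; try more pitches")
        previous_length = notes[-1][1] if notes else None
        length = rng.choice([n for n in lengths if n != previous_length])
        if len(run) == 4:
            seen_runs.add(run)
        notes.append((pitch, length))
    return notes


a_minor = [57, 59, 60, 62, 64, 65, 67]
melody = constrained_melody(a_minor + [n + 12 for n in a_minor], [0.5, 1, 2], count=64, seed=7)
print(melody[:4])
```

Each pair holds a MIDI note number and a length in beats, which could then be converted to ticks and written out with Mido.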